Install Ntp On Solaris 11 Repository

How to automate system configuration across your heterogeneous data center.

About Puppet

Puppet is a popular open source configuration management tool that's now included with Oracle Solaris 11.2. Using a declarative language, administrators can describe the system configuration that they would like to apply to a system or a set of systems, helping to automate repetitive tasks, quickly deploy applications, and manage change across the data center. These capabilities are increasingly important as administrators manage more and more systems—including virtualized environments. In addition, automation can reduce the human errors that can occur with manual configuration.

Puppet is usually configured to use a client/server architecture where nodes (agents) periodically connect to a centralized server (master), retrieve configuration information, and apply it. The Puppet master controls the configuration that is applied to each connecting node.

Overview of Using Puppet to Administer Systems

This article covers the basics of administering systems with Puppet using an example of a single master (master.oracle.com) and single agent (agent.oracle.com). To learn more about Puppet, including more-advanced configuration management options, check out the Puppet 3 Reference Manual.

Installing Puppet

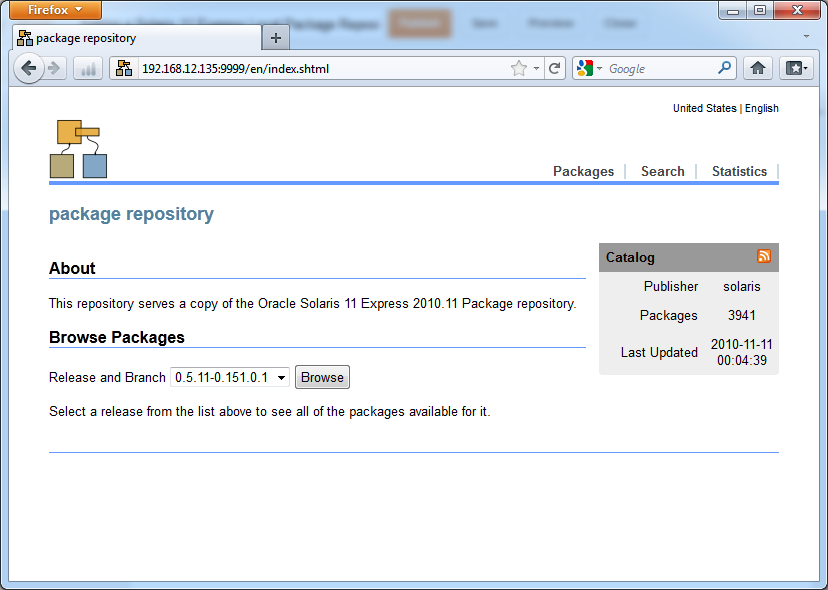

Puppet is available through a single package in the Oracle Solaris Image Packaging System repository that provides the ability to define a system as either a master or an agent. By default, the package is not installed with the Oracle Solaris 11 media, as indicated by the output shown in Listing 1 from using the pkg info command to query the system:

Listing 1

To install the Puppet package, use the pkg install command, as shown in Listing 2:

Listing 2

Configuring Masters and Agents

Now that we've installed Puppet, it's time to configure our master and agents. In this article, we will use two systems: one that will act as the Puppet master and another that will be an agent node. In reality, you may have hundreds or thousands of nodes communicating with one or more master servers.

When you install Puppet on Oracle Solaris 11, two services become available, one for the master and one for agents, as shown in Listing 3:

Listing 3

Puppet has been integrated with the Oracle Solaris Service Management Facility configuration repository so that administrators can take advantage of a layered configuration (which helps preserve configuration during updates). Service Management Facility stencils provide seamless mapping between the configuration stored in the Service Management Facility configuration repository and the traditional Puppet configuration file, /etc/puppet/puppet.conf.

The first step is to configure and enable the master service using the Service Management Facility:

Listing 4

As you can see in Listing 4, the Puppet master service is now online. Let's switch over to the node that will be controlled by the master and configure it. We will set the value of config/server to point to our master, as shown in Listing 5:

Listing 5

Once we have done this, we can test our connection by using the puppet agent command with the --test option, as shown in Listing 6. More importantly, this step also creates a new Secure Sockets Layer (SSL) key and sets up a request for authentication between the agent and the master.

Listing 6

If we move back to our master, we can use the puppet cert list command to view outstanding certificate requests from connecting clients:

Listing 7

In Listing 7, we can see that a request has come in from agent.oracle.com. Assuming this is good, we can now go ahead and sign this certificate using the puppet cert sign command, as shown in Listing 8:

Listing 8

Let's return to the agent again and retest our connection to make sure the authentication has been correctly set up, as shown in Listing 9:

Listing 9

And finally, let's enable the agent service, as shown in Listing 10:

Listing 10

Puppet Resources, Resource Types, and Manifests

Puppet uses the concept of resources and resource types as a way of describing the configuration of a system. Examples of resources include a running service, a software package, a file or directory, and a user on a system. Each resource is modeled as a resource type, which is a higher-level abstraction defined by a title and a series of attributes and values.

Puppet has been designed to be cross-platform compatible such that different implementations can be created for similar resources using platform-specific providers. For example, installing a package using Puppet would use the Image Packaging System on Oracle Solaris 11 and RPM on Red Hat Enterprise Linux. This ability enables administrators to use a common set of configuration definitions to manage multiple platforms. This combination of resource types and providers is known as the Puppet Resource Abstraction Layer (RAL). Using a declarative language, administrators can describe system resources and their state; this information is stored in files called manifests.

To see a list of resource types that are available on an Oracle Solaris 11 system, we can use the puppet resource command with the --types option:

Listing 11

As you can see in Listing 11, there are 72 resource types that are available on this system currently. Many of these have been made available as part of the core Puppet offering and won't necessarily make sense within an Oracle Solaris 11 context. However, having these resource types means that you can manage non-Oracle Solaris 11 systems as agents if you wish. We can view more information about those resource types using the puppet describe command with the --list option, as shown in Listing 12:

Listing 12

Querying a System Using Resource Types

Before we dive into configuring systems, let's use Puppet to query a system based on the resource types we saw in Listing 11 and Listing 12. We will use the puppet resource command with the appropriate resource type. The puppet resource command converts the current system state into Puppet's declarative language, which can be used to enforce configuration on other systems. For example, let's query for the service state of our system by using the service resource type:

Listing 13

In Listing 13, we can see a list of individual resources identified by their service names. Within each resource, there are two attributes—ensure and enable—and there is a value associated with them. This is the heart of Puppet's declarative language. A resource can be described as shown in Listing 14:

Listing 14

Each resource_type has a title, which is an identifying string used by Puppet that must be unique per resource type. Attributes describe the desired state of the resource. Most resources have a set of required attributes, but also include a set of optional attributes. Additionally, each resource type has a special attribute, namevar, which is used by the target system and must be unique. If namevar is not specified, it often defaults to title. This is important when you are planning to manage multiple platforms. For example, suppose you want to ensure that the NTP service is installed on both Oracle Solaris 11 and Red Hat Enterprise Linux. The resource could be consistently titled as ntp, but service/network/ntp could be used for namevar on Oracle Solaris 11 and ntpd could be used for namevar on Red Hat Enterprise Linux.
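To illustrate the difference between title and namevar, here is a minimal sketch rather than a tested manifest. It assumes the package resource type, whose namevar is the name attribute, and the osfamily fact reported by Facter. The two platform-specific names are copied from the paragraph above and should be verified on each platform before use; the variable $ntp_name is hypothetical.

```puppet
# Pick the platform-specific name (the namevar) from the osfamily fact.
# The two names come from the text above; $ntp_name is a hypothetical
# variable introduced for this sketch. There is no default branch, so
# compilation fails on any other platform.
$ntp_name = $::osfamily ? {
  'Solaris' => 'service/network/ntp',
  'RedHat'  => 'ntpd',
}

# The title stays 'ntp' on every platform; only the namevar differs.
package { 'ntp':
  ensure => present,
  name   => $ntp_name,
}
```

Other resources can then refer to Package['ntp'] regardless of the platform the manifest is applied to.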

Now let's use the zone resource type to query for the current state of the global zone and any non-global zones that have been configured or installed on the master:

Listing 15

In Listing 15, we can see that we do not have any non-global zones on this system; we just have the global zone.

Finally, let's take a look at what Image Packaging System publishers have been configured by looking at the pkg_publisher resource type, as shown in Listing 16:

Listing 16

Performing Simple Configuration with Puppet

Now that we have set up a Puppet master and agent and learned a little about resource types and how resources are declared, we can start to enforce some configuration on the agent.

Puppet uses a main site manifest—located at /etc/puppet/manifests/site.pp—where administrators can centrally define resources that should be enforced on all agent systems. As you get more familiar with Puppet, the following approach is recommended:

- Use site.pp only for configuration that affects all agents.

- Split out specific agents into separate Puppet classes.

We won't cover the use of Puppet classes in this article, but we will use site.pp to define our resources.

For simplicity, let's take a look at the file resource type. Let's modify /etc/puppet/manifests/site.pp and include the following resource declaration:

Listing 17

The declaration shown in Listing 17 uses the file resource type and two attributes—ensure and content—to ensure that a custom-file.txt file exists in the root directory on the agent node and that the file includes the content 'Hello World.'
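A declaration matching that description could be sketched as follows. This is a reconstruction from the description above, not necessarily the exact text of the original listing.

```puppet
# Ensure /custom-file.txt exists and contains the given text.
file { '/custom-file.txt':
  ensure  => present,
  content => 'Hello World',
}
```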

Once we have saved the /etc/puppet/manifests/site.pp file, we can test that it is valid by using the puppet apply command. We'll use the -v option to increase the verbosity of the output and the --noop option to ensure that no changes are made (in essence, to do a dry run), as shown in Listing 18:

Listing 18

We could choose to apply this resource to the master itself by removing the -v and --noop options, running puppet apply again, and then checking for the existence and contents of the custom-file.txt file, as shown in Listing 19:

Listing 19

By default, agents contact the master server in 30-minute intervals (this can be changed in the configuration, if required). We can check that Puppet has enforced this configuration by looking to see whether the custom-file.txt file has appeared and checking the Puppet agent log located at /var/log/puppet/puppet-agent.log:

Listing 20

As shown in Listing 20, the configuration enforcement succeeded and the file was created on the agent system.

Using the Facter Utility

Puppet uses a utility called Facter to gather information about a particular node and send it to the Puppet master. This information is used to determine what system configuration should be applied to a node. To see some 'facts' about a node, we can use the facter command, as shown in Listing 21:

Listing 21

To list all facts for a node, including Puppet-specific facts, we can run facter with the -p option:

Listing 22

As shown in Listing 22, a variety of different facts can be queried for on a given system. These facts can be exposed within resources as global variables that help programmatically decide what configuration should be enforced.

As an example, let's see how we could declare a file resource to populate a file with different content on different platforms. We will use the osfamily fact to detect our platform:

Listing 23

In Listing 23, we create a new variable called $file_contents and provide a conditional check using the osfamily fact. Then, depending on the platform type, we assign different contents to our file.
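One way to write such a check is with a selector, as in the sketch below. The message strings and the default branch are assumptions added for illustration; only the $file_contents variable and the osfamily fact come from the description above.

```puppet
# Choose the file contents based on the osfamily fact.
# The strings are illustrative; the default branch covers any
# platform that is not listed explicitly.
$file_contents = $::osfamily ? {
  'Solaris' => 'Hello Oracle Solaris',
  'RedHat'  => 'Hello RHEL',
  default   => 'Hello World',
}

file { '/custom-file.txt':
  ensure  => present,
  content => $file_contents,
}
```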

Matching Configuration to Specific Nodes

When managing configuration across a variety of systems, administrators might want to provide some conditional logic to control how a node gets matched to an appropriate configuration. For this task, we can use the node keyword within our manifests. For example, if we wanted to match the configuration for a specific host called agent1.oracle.com, we could use what's shown in Listing 24:

Listing 24

Or, to match against agent1.oracle.com and agent2.oracle.com but provide a separate resource definition for agent3.oracle.com, we could use what's shown in Listing 25:

Listing 25
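As a sketch of this kind of matching, a node definition can list several names separated by commas, and a second definition can cover another host. The file resources and their contents below are placeholders chosen for illustration.

```puppet
# agent1 and agent2 share one definition.
node 'agent1.oracle.com', 'agent2.oracle.com' {
  file { '/custom-file.txt':
    ensure  => present,
    content => 'Hello from the shared definition',
  }
}

# agent3 gets its own, separate resource definition.
node 'agent3.oracle.com' {
  file { '/custom-file.txt':
    ensure  => present,
    content => 'Hello agent3',
  }
}
```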

The use of default as a node name is special, allowing for a fallback configuration for nodes that don't match other node definitions. You can define a fallback as shown in Listing 26:

Listing 26
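A fallback of this kind could look like the following sketch, where the file resource is again only a placeholder.

```puppet
# Applied to any node that matches no other node definition.
node default {
  file { '/custom-file.txt':
    ensure  => present,
    content => 'Hello from the fallback definition',
  }
}
```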

Using Puppet for Oracle Solaris 11 System Configuration

Now that we've covered the basics of Puppet, let's look at the specific resource types and providers that have been added to enable the administration of Oracle Solaris 11.2 systems. These resource types provide administrators with the ability to manage a wide range of Oracle Solaris technologies, including packaging, services, ZFS, Oracle Solaris Zones, and a diverse set of network configurations.

Managing Oracle Solaris Zones

We'll start by taking a look at the zone resource type. To get a better understanding of what attributes can be set, we'll use the puppet describe command again to look at the documentation:

Listing 27

In Listing 27, we can see that one of the attributes is called zonecfg_export, and it gives us the ability to provide a zone configuration file. Let's quickly create one using the zonecfg command, as shown in Listing 28. We'll call the zone testzone for now, but this will be configurable when we use the zone resource type.

Listing 28

We can now define our zone within our manifest as follows:

Listing 29

In Listing 29, you'll notice that we provided a value of /tmp/zone.cfg for our zone configuration file, and we set the ensure attribute to installed. The value of ensure matches the zone states configured, installed, and running. In this case, we'll be creating a zone called myzone on the agent node. Once we have applied the configuration, we'll wait to see what happens on the node:

Listing 30

As we can see in Listing 30, our zone is now configured and installed, and it is ready to be booted.
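For reference, the zone declaration described in this section could be sketched as follows, using only the title, attribute, and values named in the text. It assumes that /tmp/zone.cfg is present on the agent node where the manifest is applied.

```puppet
# Create the zone 'myzone' from an exported zone configuration
# and bring it to the 'installed' state.
zone { 'myzone':
  ensure         => installed,
  zonecfg_export => '/tmp/zone.cfg',
}
```

Because the value of ensure matches the zone states, changing it to running would be the natural way to have Puppet boot the zone as well.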

Adding Software Packages

Now we'll use the package resource type to add a new software package using the Image Packaging System.

Let's first confirm that the package is not currently installed on the agent node, as shown in Listing 31:

Listing 31

The resource definition for this is simple. We set the title to nmap and ensure that the package is present, as shown in Listing 32:

Listing 32
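A declaration matching that description might look like this sketch, reconstructed from the text rather than copied from the original listing.

```puppet
# Install the nmap package with the platform's default provider
# (the Image Packaging System on Oracle Solaris 11).
package { 'nmap':
  ensure => present,
}
```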

After a short while, we can check our agent node to ensure the package has been correctly installed, as shown in Listing 33:

Listing 33

Earlier, we mentioned that Puppet enforces configuration. So let's try to remove the package from the agent node:

Listing 34

As Listing 34 shows, the package is no longer installed. But, sure enough, when the agent node contacts the master after a short period, the package is reinstalled, as shown in Listing 35:

Listing 35

Creating ZFS Datasets

We'll take the simplest example for enforcing the existence of a ZFS dataset by using the following resource definition with the zfs resource type. We'll also set an additional attribute called readonly to on, as shown in Listing 36:

Listing 36
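Such a definition could be sketched as follows. The dataset name rpool/test is a hypothetical placeholder, since the name used in the original listing is not given in the text.

```puppet
# Ensure the dataset exists and is read-only.
# 'rpool/test' is a hypothetical dataset name.
zfs { 'rpool/test':
  ensure   => present,
  readonly => 'on',
}
```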

We can quickly confirm that a new ZFS dataset has been created and the readonly dataset property has been set, as shown in Listing 37:

Listing 37

Performing Additional Configuration Using Stencils

As mentioned previously, all of Puppet's configuration is managed through Service Management Facility stencils. You should avoid directly editing /etc/puppet/puppet.conf, because such edits will be lost when the Puppet Service Management Facility services are restarted. Instead, configuration can be achieved by using svccfg and the configuration properties defined in puppet.conf(5), as shown in Listing 38:

Listing 38

Using the Service Management Facility to store Puppet configuration also makes it very easy to configure Puppet environments, which are a useful way of dividing up your data center into an arbitrary number of environments.

For example, you could have the Puppet master manage configurations for development and production environments. This is easily achieved by creating new Service Management Facility instances of the Puppet service, as shown in Listing 39:

Listing 39

Summary

Puppet is an excellent tool for administrators who want to enforce configuration management across a wide range of platforms in their data centers. This article briefly touched on a small fraction of the capabilities of Puppet. With support for a new set of Oracle Solaris 11–based resource types—including packaging, service configuration, networking, virtualization, and data management—administrators can now benefit from the type of automation they have achieved on Linux-based platforms previously.

See Also

Here are some Puppet resources:

- Puppet Configuration Reference

And here are some Oracle Solaris 11 resources:

- Download Oracle Solaris 11

- Access Oracle Solaris 11 product documentation

- Access all Oracle Solaris 11 how-to articles

- Learn more with Oracle Solaris 11 training and support

- See the official Oracle Solaris blog

- Check out The Observatory blogs for Oracle Solaris tips and tricks

- Follow Oracle Solaris on Facebook and Twitter

About the Author

Glynn Foster is a principal product manager for Oracle Solaris. He is responsible for a number of technology areas including OpenStack, the Oracle Solaris Image Packaging System, installation, and configuration management.

Configuring Network Time Protocol (NTP)

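This section contains the following procedures:

- How to Use Your Own /etc/inet/ntp.conf File

- How to Install NTP After Adding a Node to a Single-Node Cluster

- How to Update NTP After Changing a Private Hostname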

How to Use Your Own /etc/inet/ntp.conf File

- Assume the root role on a cluster node.

- Add your /etc/inet/ntp.conf file to each node of the cluster.

- On each node, determine the state of the NTP service.

- Start the NTP daemon on each node.

  - If the NTP service is disabled, enable the service.

  - If the NTP service is online, restart the service.
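If the cluster nodes are also Puppet agents, the same outcome could be approximated declaratively instead of running the steps by hand. The sketch below is an alternative technique, not part of the documented procedure. It assumes a hypothetical ntp module on the master that ships the ntp.conf file, and it assumes the NTP service is svc:/network/ntp:default.

```puppet
# Install a site-specific NTP configuration file. The 'ntp' module and
# its ntp.conf file are hypothetical and would have to exist on the master.
file { '/etc/inet/ntp.conf':
  ensure => file,
  source => 'puppet:///modules/ntp/ntp.conf',
  notify => Service['ntp'],
}

# Keep the NTP service enabled and running. The notify relationship
# above restarts it whenever the configuration file changes.
service { 'ntp':
  ensure => running,
  enable => true,
  name   => 'svc:/network/ntp:default',
}
```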

Next Steps

Determine from the following list the next task to perform that applies to your cluster configuration. If you need to perform more than one task from this list, go to the first of those tasks in this list.

If you want to install a volume manager, go to Configuring Solaris Volume Manager Software.

If you want to create cluster file systems, go to How to Create Cluster File Systems.

To find out how to install third-party applications, register resource types, set up resource groups, and configure data services, see the documentation that is supplied with the application software and the Oracle Solaris Cluster 4.3 Data Services Planning and Administration Guide.

When your cluster is fully configured, validate the configuration. Go to How to Validate the Cluster.

Before you put the cluster into production, make a baseline recording of the cluster configuration for future diagnostic purposes. Go to How to Record Diagnostic Data of the Cluster Configuration.

How to Install NTP After Adding a Node to a Single-Node Cluster

When you add a node to a single-node cluster, you must ensure that the NTP configuration file that you use is copied to the original cluster node as well as to the new node.

- Assume the root role on a cluster node.

- Copy the /etc/inet/ntp.conf and /etc/inet/ntp.conf.sc files from the added node to the original cluster node.

These files were created on the added node when it was configured with the cluster.

- On the original cluster node, create a symbolic link named /etc/inet/ntp.conf.include that points to the /etc/inet/ntp.conf.sc file.

- On each node, determine the state of the NTP service.

- Start the NTP daemon on each node.

  - If the NTP service is disabled, enable the service.

  - If the NTP service is online, restart the service.
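On a node that is managed as a Puppet agent, the symbolic link from this procedure could also be expressed as a file resource. This is a sketch of an alternative technique, not a step from the documented procedure.

```puppet
# Make /etc/inet/ntp.conf.include a symbolic link to ntp.conf.sc.
file { '/etc/inet/ntp.conf.include':
  ensure => link,
  target => '/etc/inet/ntp.conf.sc',
}
```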

How to Update NTP After Changing a Private Hostname

- Assume the root role on a cluster node.

- On each node of the cluster, update the /etc/inet/ntp.conf.sc file with the changed private hostname.

- On each node, determine the state of the NTP service.

- Start the NTP daemon on each node.

  - If the NTP service is disabled, enable the service.

  - If the NTP service is online, restart the service.
